Ray Kurzweil’s „The Singularity is Near“ highlights the importance and urgency of understanding the intersection of AI, ethics, human evolution, design, and military technology.

It raises both technological and ethical questions. In the context of Kurzweil’s prediction that an AI would pass the Turing Test by 2029, the development and capabilities of AI models like GPT-4o become particularly relevant. The Turing Test is a benchmark designed to evaluate a machine’s ability to exhibit intelligent behavior indistinguishable from that of a human. As recently as his appearance at SXSW on March 10th, 2024, Kurzweil confirmed that his 2007 prediction still holds (SXSW, 2024). He even argued that some might consider the Turing Test already passed by ChatGPT-4. However, he added, the model might have to be made dumber to fool experts, who would suspect an AI if every field were answered as proficiently as ChatGPT-4 can answer it.

1. Photo: Harrison H. (Jack) Schmitt / NASA AS17-140-21391, used under fair use for educational purposes / Apollo 17 / This is the best of the portraits that Jack took of Gene (Eugene A. Cernan) at the start of EVA-3. Jack, with the South Massif behind him, is clearly visible in Gene’s face plate.

2. Illustration: Richard Koch / Photobashing (Photoshop) 2010 / I made this work quite some time ago, when there were no generative tools. It took about two full days to research and retouch the found material (e.g., a U-2 pressure-suit helmet) and to compose the final image.

3. Illustration: Richard Koch / Midjourney / It took me about one minute to think about the desired outcome and write the prompt, 30 seconds for Midjourney to generate the image, and five minutes to touch it up in Photoshop.

As we approach 2029, AI is advancing rapidly in language processing, decision-making, and learning, bringing us ever closer to passing this test. As AI begins to fulfill roles that require complex, human-like interaction, we will need to critically assess ethical dimensions such as transparency, predictability, and the moral status of AI, and to ensure that these systems enhance societal well-being without infringing on human autonomy or dignity.

Military technologies have often catalyzed technological innovation and design revolutions. Military developments have not only revolutionized warfare but also influenced design in popular culture, as seen in the similarity between the cockpit of the Boeing B-29 and that of the Millennium Falcon from Star Wars (images 4, 5). Visual and functional characteristics travel from military applications to civilian use and vice versa, which raises the question: what influences what? A chicken-and-egg problem.

4. B-29 cockpit and fuselage assembly / FPG/Getty Images, used under fair use for educational purposes.

5. Photo: Pete Vilmur, used under fair use for educational purposes / Harrison Ford and Peter Mayhew in the cockpit of the Millennium Falcon during the making of a scene for the Star Wars Holiday Special

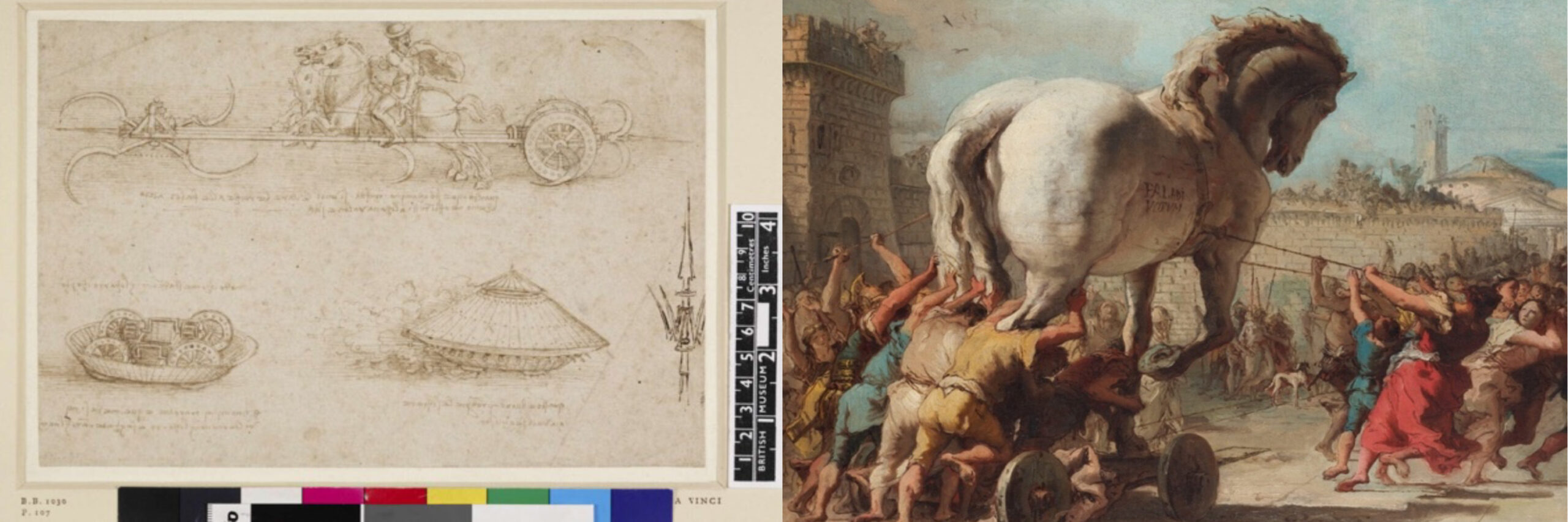

Leonardo da Vinci’s designs, ranging from civil engineering to military technologies such as tank-like machines (image 6) and siege engines, demonstrate how creativity and military needs intertwine. Wernher von Braun began by developing the V-2 rocket for the Nazis; this work evolved into the Redstone and eventually the Saturn rockets that carried astronauts to the moon.

The Trojan Horse (image 7) exemplifies military deception. This ancient tactic has evolved into modern stealth technology, exemplified by the Lockheed F-117 Nighthawk, the first production stealth aircraft, which was used in the Gulf War. At the far end of this spectrum lies cyber warfare, a field I knew little about before my visit to the NATO Cooperative Cyber Defence Centre of Excellence (CCDCOE) in Tallinn.

6. Leonardo da Vinci / Studies of military tank-like machines, including one at the top with horses pulling a contraption with revolving scythes / Pen and brown ink, over stylus (some drawn with a ruler or a compass) / Inscription content: Inscribed with notes in reverse: Popham & Pouncey 1950 Inscribed under top machine: ‚quando questo chamina tra sua si vuol levare le roche alle falci acciò / che no ne offendessi alcuna volta e sua‘. Under bottom right machine: ‚questo e buono per ronpre le schiere / ma vuol seghuito‘. Above bottom left machine: ‚modo chome sta dentro il charro choperto‘; below it: ‚8 huomini trarrano e que medesimj / volteranno il charro e seghuiteranno il nemi[co]‘ (end of inscription trimmed off). © The Trustees of the British Museum, used under fair use for educational purposes.

7. Giovanni Domenico Tiepolo – The Procession of the Trojan Horse into Troy / circa 1760 / National Gallery, London / https://www.nationalgallery.org.uk/paintings/giovanni-domenico-tiepolo-the-procession-of-the-trojan-horse-into-troy / used under fair use for educational purposes.

With the integration of AI into this feedback loop, new possibilities will emerge. Algorithms that analyze user data could create personalized products and designs tailored precisely to individual needs. This enables a mode of production based no longer on mass production but on single-unit customization (lot size: 1), similar to the concept of von Neumann machines that can replicate themselves.

Kurzweil’s vision of the technological singularity, the point at which AI surpasses human intelligence, underscores the urgency of this development. AI could mark the next significant step in our evolution by enabling us to transcend the limitations of our biological bodies. Yet this raises important ethical questions: what happens when little of our biological body remains, and essential substances are delivered synthetically or via nanobots to the remaining crucial organs, such as the brain and skin? Where is the ethical threshold for deploying such technologies?

8. Photo: Senior Airman Joshua Hoskins VIRIN: 190305-F-HA049-003, used under fair use for educational purposes / The XQ-58A Valkyrie demonstrator, a long-range, high-subsonic unmanned air vehicle, completed its inaugural flight on March 5, 2019, at Yuma Proving Ground, Arizona. / 88th Air Base Wing Public Affairs / https://www.wpafb.af.mil/News/Article-Display/Article/1777743/xq-58a-valkyrie-demonstrator-completes-inaugural-flight/

9. Photo: © Commonwealth of Australia, Department of Defence, used under fair use for educational purposes / ATS First Flight (Boeing MQ-28) / https://www.boeing.com/defense/mq28#gallery

In the military context, the question of decision-making autonomy for AI-controlled drones arises. For instance, Kratos Defense and Security Solutions is developing the XQ-58A Valkyrie combat drone (image 8), a platform designed for a high degree of autonomy that could eventually be capable of making lethal decisions on its own. Another advanced version of this „loyal wingman“ concept is Boeing’s MQ-28 (image 9). This is an escalation of the “trolley problem” familiar from the debate on autonomous driving. How do people react to the idea that machines could decide over life and death? Again: which ethical boundaries must be set?

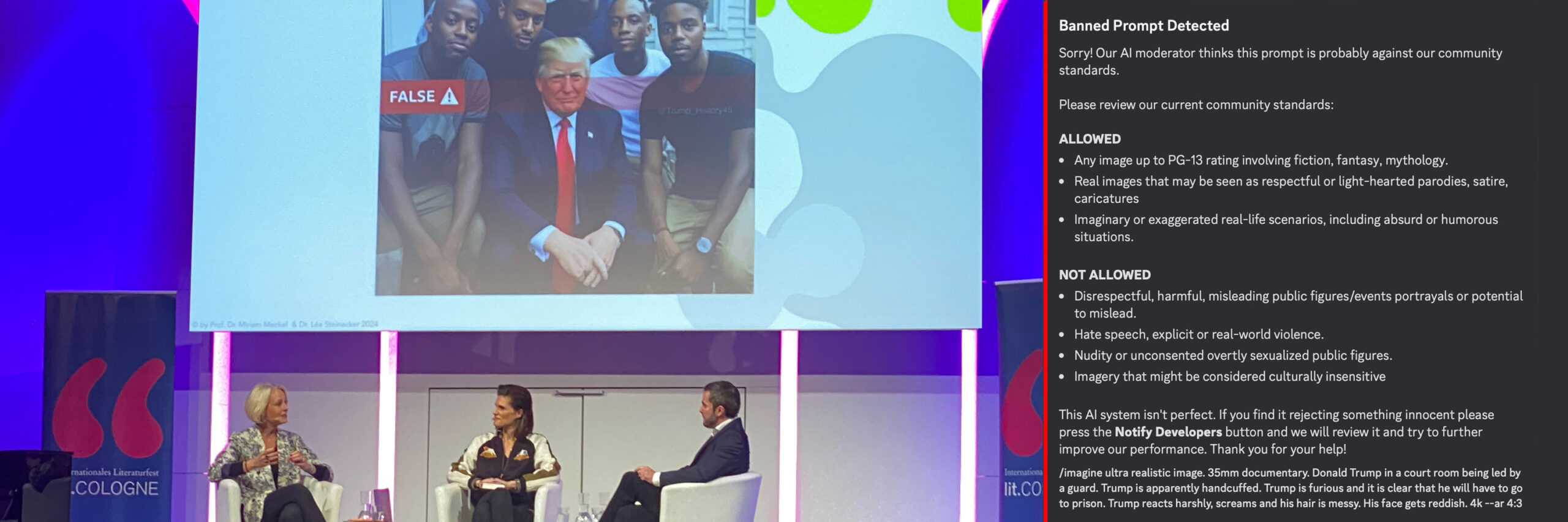

10. Photo: taken by the author during a discussion at Lit Cologne, March 2023. / Dr. Miriam Meckel and Dr. Léa Steinacker explore the impact of deepfakes in political campaigns, showcasing a digitally altered image of Donald Trump.

11. Screenshot: taken by the author, used under fair use for educational purposes, demonstrating Midjourney’s response to a request for generating sensitive content.

A personal experiment with Midjourney, an AI-powered image-generation tool, revealed where such ethical boundaries lie. I attempted to create a hyper-realistic depiction of Donald Trump being arrested in a courtroom. However, the platform denied this request, citing its guidelines against creating deceptive or misleading images of public figures. Midjourney’s refusal, captured in a screenshot (image 11), underscores the built-in ethical safeguards that are crucial in preventing the misuse of AI to fabricate politically sensitive or potentially harmful content.

At Lit Cologne, where Miriam Meckel and Léa Steinacker recently discussed their book „Alles überall auf einmal“ (Everything Everywhere All at Once; image 10), I noticed a crucial and seemingly widespread misunderstanding. The discussion addressed the phenomenon of deepfakes, highlighting both the capabilities of technologies such as Microsoft’s VASA-1 to create deepfakes and tools like Sentinel designed to combat them. While the conversation highlighted the implications of deepfakes, it did not address the fact that manipulating truth through technology is not a new phenomenon. Photoshop, for example, has long been used to alter images digitally, and the forging of money and documents dates back even further.

The real issue transcends the tools themselves; it concerns the state of our society, our educational systems, and our responsibility in using these technologies. This reflection aligns with Nick Bostrom’s insights in „Superintelligence,“ which cautions against the unforeseen powers of AI and the potential risks it poses.

This experience raises significant questions about the role of AI and the responsibility of AI developers to prevent misuse. As AI continues to advance, its ability to generate realistic and potentially misleading content is both a technological marvel and a societal risk. It highlights the need for robust media literacy and critical thinking in society, so that individuals can recognize AI-generated content and understand its context. The ethical frameworks guiding AI applications are not just operational necessities; they are essential for maintaining trust in digital content.

As long as there are mechanisms in place to guard us from fakes, are we safe? Which images will convince us that something is real? What about current voice-driven video generation like VASA-1? What about GPT-4o? When will the Turing Test be passed?

In my opinion, context is always the key issue: with background knowledge of the politics behind the space race and an understanding of how physical objects behave (the pendulum, the feather-and-hammer experiment), a moon-landing conspiracy becomes very unlikely.

One of the most compelling conspiracy theories in the history of technology and media posits that Stanley Kubrick was involved in faking the 1969 moon landing. This theory, although widely debunked, offers a fascinating lens through which to examine the evolution of human tools and technology—from the first tools used by primates to contemporary advancements in AI.

Kubrick’s filmmaking, particularly the famous match cut in 2001: A Space Odyssey that transitions from a bone tossed into the air to a spaceship, symbolizes humanity’s leap from basic tool use to sophisticated technology. This scene not only highlights the continuity of tool use throughout human history but also sparks a discussion on how tools shape our reality and perception.

Each major development – the invention of writing, the printing press, the internet – has dramatically shifted human society and our ways of interacting with the world. Today, AI represents the latest significant tool. As we integrate AI, we find ourselves at a pivotal moment, potentially on the brink of a new evolutionary path for humanity.

Nick Bostrom (2014) notes that the fate of the gorillas now depends more on humans than on the gorillas themselves, illustrating the dependency that can develop between a less capable species and a more capable one. Will AI remain a tool under human control, or will it evolve into a defining force with its own agency, much as humans have come to control the fate of other species?

12. A bone-club and a satellite in orbit, the two subjects of the iconic match cut in 2001: A Space Odyssey. / Screen captures from 2001: A Space Odyssey DVD / used under fair use for educational purposes

13. A crab-eating macaque using a stone / Haslam M, Gumert MD, Biro D, Carvalho S, Malaivijitnond S (2013) Use-Wear Patterns on Wild Macaque Stone Tools Reveal Their Behavioural History. PLoS ONE 8(8): e72872. / used under fair use for educational purposes

So what makes us humans susceptible to fakes? Missing background knowledge? A lack of trust?

What makes us vulnerable to fakes may indeed be a lack of knowledge or trust. Addressing this through comprehensive research and public engagement can help demystify AI and foster a more informed and trusting relationship with the technology. To this end, conducting surveys across various sectors to gauge public perception and ethical concerns could provide insights into the societal impact of AI.

We should not only adapt to the changes AI brings but also shape them to reflect our collective values, ensuring that we evolve with our creations.

References:

Bostrom, N. (2014). Superintelligence: Paths, Dangers, Strategies. Oxford: Oxford University Press.

Gumert, M.D. (2013). Macaca fascicularis aurea using a stone tool [Photograph]. In: Haslam, M., Gumert, M.D., Biro, D., Carvalho, S. & Malaivijitnond, S. (2013). Use-Wear Patterns on Wild Macaque Stone Tools Reveal Their Behavioural History. PLoS ONE, 8(8), e72872. Available at: https://doi.org/10.1371/journal.pone.0072872.g002 (Accessed 22 May 2024).

Kurzweil, R. (2005). The Singularity is Near: When Humans Transcend Biology. New York: Viking.

Meckel, M. & Steinacker, L. (2024). Alles überall auf einmal: Wie Künstliche Intelligenz die Welt verändert und was wir dabei gewinnen können. Hamburg: Rowohlt Verlag.

SXSW (2024). Ray Kurzweil SXSW 2024 Session [Video]. 10 March. Available at: https://www.youtube.com/watch?v=i412Ev33Dys (Accessed 10 June 2024).